Get NPTEL Machine Learning Week 11 answers at nptel.answergpt.in. Our expert-verified solutions help you understand key concepts, simplify complex topics and enhance your assignment performance with accuracy and confidence.

📌 Subject: Machine Learning

📌 Week: 11

📌 Session: NPTEL 2025

📌 Course Link: NPTEL Machine Learning

📌 Reliability: Expert-reviewed answers

We recommend using these answers as a reference to verify your solutions. For complete, detailed solutions for all weeks, visit [Week 1-12] NPTEL Introduction to Machine Learning Assignment Answers 2025.

🚀 Stay ahead in your NPTEL journey with updated solutions every week!

| Week-by-Week NPTEL Machine Learning Assignments in One Place |
|---|
| Machine Learning Week 1 Answers |
| Machine Learning Week 2 Answers |
| Machine Learning Week 3 Answers |
| Machine Learning Week 4 Answers |
| Machine Learning Week 5 Answers |
| Machine Learning Week 6 Answers |
| Machine Learning Week 7 Answers |
| Machine Learning Week 8 Answers |
| Machine Learning Week 9 Answers |
| Machine Learning Week 10 Answers |
| Machine Learning Week 11 Answers |
| Machine Learning Week 12 Answers |

Introduction to Machine Learning Week 11 NPTEL Assignment Answers 2025

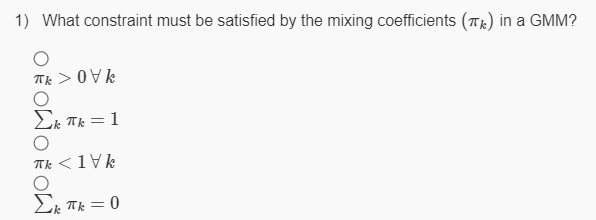

1.

Answer :- b

2. The EM algorithm is guaranteed to decrease the value of its objective function on any iteration.

- True

- False

Answer :- b
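Answer (b) follows from a standard property of EM: each iteration never decreases the likelihood it is maximizing. The likelihood either goes up or stays the same, so "guaranteed to decrease" is false. Below is a minimal plain-Python sketch of EM for a one-dimensional, two-component GMM, written only to illustrate this property. The data points, initial values, and helper names (`normal_pdf`, `log_likelihood`, `em_step`) are assumptions chosen for the example.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def log_likelihood(data, weights, means, variances):
    """Sum over samples of log( sum_k pi_k * N(x | mu_k, var_k) )."""
    total = 0.0
    for x in data:
        total += math.log(sum(w * normal_pdf(x, m, v)
                              for w, m, v in zip(weights, means, variances)))
    return total

def em_step(data, weights, means, variances):
    """One E-step followed by one M-step; returns the updated parameters."""
    k = len(weights)
    # E-step: responsibilities r[n][j] = P(z_n = j | x_n, current parameters)
    resp = []
    for x in data:
        joint = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
        s = sum(joint)
        resp.append([p / s for p in joint])
    # M-step: closed-form weighted updates of weights, means and variances
    n = len(data)
    new_w, new_m, new_v = [], [], []
    for j in range(k):
        nj = sum(r[j] for r in resp)
        mu = sum(r[j] * x for r, x in zip(resp, data)) / nj
        var = sum(r[j] * (x - mu) ** 2 for r, x in zip(resp, data)) / nj
        new_w.append(nj / n)
        new_m.append(mu)
        new_v.append(var)
    return new_w, new_m, new_v

# Illustrative data with two loose clusters, and a deliberately poor start.
data = [-2.3, -2.0, -1.8, -1.5, 1.2, 1.9, 2.1, 2.6]
params = ([0.5, 0.5], [-0.5, 0.5], [1.0, 1.0])
history = [log_likelihood(data, *params)]
for _ in range(20):
    params = em_step(data, *params)
    history.append(log_likelihood(data, *params))

# EM never lowers the log-likelihood (the tolerance only absorbs round-off).
assert all(b >= a - 1e-9 for a, b in zip(history, history[1:]))
print(history[0], history[-1])
```

The sequence in `history` can stall, but it cannot go down. That is the opposite of the claim in the question.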

3. Why might the EM algorithm for GMMs converge to a local maximum rather than the global maximum of the likelihood function?

- The algorithm is not guaranteed to increase the likelihood at each iteration

- The likelihood function is non-convex

- The responsibilities are incorrectly calculated

- The number of components K is too small

Answer :- b
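Label switching makes answer (b) concrete. The sketch below makes some assumptions for illustration: equal mixing weights, unit variances, a made-up six-point data set, and a hypothetical helper `log_likelihood`. Swapping the two means gives exactly the same log-likelihood, yet the midpoint of those two parameter settings scores much lower. A concave log-likelihood (equivalently, a convex negative log-likelihood) could not behave this way. This lack of concavity is what lets EM stop at a local maximum that is not the global one.

```python
import math

def normal_pdf(x, mean, var=1.0):
    """Density of a 1-D Gaussian N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def log_likelihood(data, mu1, mu2):
    """Log-likelihood of an equal-weight, unit-variance two-component GMM."""
    return sum(math.log(0.5 * normal_pdf(x, mu1) + 0.5 * normal_pdf(x, mu2))
               for x in data)

data = [-2.1, -2.0, -1.9, 1.9, 2.0, 2.1]
a, b = -2.0, 2.0
ll_ab = log_likelihood(data, a, b)       # one component on each cluster
ll_ba = log_likelihood(data, b, a)       # same fit with the labels swapped
mid = (a + b) / 2
ll_mid = log_likelihood(data, mid, mid)  # midpoint of (a, b) and (b, a)

# Concavity would require ll_mid >= (ll_ab + ll_ba) / 2; here it fails.
print(ll_ab, ll_ba, ll_mid)
```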

4. What does soft clustering mean in GMMs?

- There may be samples that are outside of any cluster boundary.

- The updates during maximum likelihood are taken in small steps, to guarantee convergence.

- It restricts the underlying distribution to be Gaussian.

- Samples are assigned probabilities of belonging to a cluster.

Answer :- d
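Soft clustering means each sample gets a probability of belonging to every component instead of a single hard label. These probabilities are the responsibilities computed in the E-step. For each component, take its mixing weight times its density at the sample, then divide by the sum of those terms over all components. Here is a minimal sketch for a one-dimensional, two-component GMM. The parameter values and the helper name `responsibilities` are assumptions for illustration.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def responsibilities(x, weights, means, variances):
    """Posterior probability that x came from each component (the E-step quantity)."""
    joint = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    total = sum(joint)
    return [p / total for p in joint]

# Illustrative GMM: two equally weighted, unit-variance components at -2 and +2.
weights, means, variances = [0.5, 0.5], [-2.0, 2.0], [1.0, 1.0]
for x in (-2.0, 0.0, 0.5):
    r = responsibilities(x, weights, means, variances)
    print(x, [round(p, 3) for p in r])
```

A point midway between the two means (x = 0.0) is split 50/50. The point x = 0.5 leans toward the right-hand component without being assigned to it outright.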

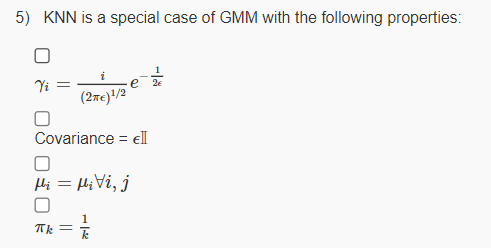

5.

Answer :- d

6. We apply the Expectation Maximization algorithm to f(D, Z, θ), where D denotes the data, Z denotes the hidden variables, and θ denotes the variables we seek to optimize. Which of the following are correct?

- EM will always return the same solution which may not be optimal

- EM will always return the same solution which must be optimal

- The solution depends on the initialization

Answer :- c
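The simplest case of answer (c) appears when EM starts from two different initial means. The runs end at two different parameter vectors, which here are label-swapped copies of each other. The sketch below is a deliberate simplification. Mixing weights are fixed at 1/2, variances at 1, and only the means are updated. The data set and the helper name `em_means` are made up for illustration. With less symmetric data or more components, different starts can also reach solutions with genuinely different likelihood values, which is the local-maximum issue from question 3.

```python
import math

def normal_pdf(x, mean, var=1.0):
    """Density of a 1-D Gaussian N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_means(data, init_means, iters=50):
    """EM for a two-component GMM whose mixing weights (1/2 each) and
    variances (1) are held fixed; only the two means are estimated."""
    mu1, mu2 = init_means
    for _ in range(iters):
        # E-step: responsibility of component 1 for each point
        # (the equal weights of 1/2 cancel in the ratio).
        r1 = [normal_pdf(x, mu1) / (normal_pdf(x, mu1) + normal_pdf(x, mu2))
              for x in data]
        r2 = [1.0 - r for r in r1]
        # M-step: each mean becomes the responsibility-weighted average of the data.
        mu1 = sum(r * x for r, x in zip(r1, data)) / sum(r1)
        mu2 = sum(r * x for r, x in zip(r2, data)) / sum(r2)
    return mu1, mu2

data = [-2.1, -2.0, -1.9, 1.9, 2.0, 2.1]
run_a = em_means(data, (-1.0, 1.0))
run_b = em_means(data, (1.0, -1.0))
print(run_a)  # component 1 ends near the left cluster
print(run_b)  # same clusters found, but with the labels swapped
```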

7. True or False: Iterating between the E-step and M-step of EM algorithms always converges to a local optimum of the likelihood.

- True

- False

Answer :- a

8. The number of parameters needed to specify a Gaussian Mixture Model with 4 clusters, data of dimension 5, and diagonal covariances is:

- Less than 21

- Between 21 and 30

- Between 31 and 40

- Between 41 and 50

Answer :- d
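Here is the arithmetic behind answer (d). Each of the 4 components has a 5-dimensional mean (4 × 5 = 20 numbers). Each also has a diagonal covariance with 5 variances (another 20) and a mixing weight (4 in total). That gives 20 + 20 + 4 = 44. If you count only K - 1 free weights, because the weights must sum to 1, the total is 43. Either way it lies between 41 and 50. The helper below, `gmm_param_count`, is a hypothetical function written for this example that reproduces the count.

```python
def gmm_param_count(k, d, covariance="diagonal", free_weights=False):
    """Count the parameters of a k-component GMM on d-dimensional data.

    free_weights=True counts k - 1 mixing weights, since the k weights sum to 1.
    """
    means = k * d                      # one d-dimensional mean per component
    if covariance == "diagonal":
        covs = k * d                   # d variances per component
    elif covariance == "full":
        covs = k * d * (d + 1) // 2    # symmetric d x d matrix per component
    else:
        raise ValueError("covariance must be 'diagonal' or 'full'")
    weights = k - 1 if free_weights else k
    return means + covs + weights

print(gmm_param_count(4, 5))                     # 44
print(gmm_param_count(4, 5, free_weights=True))  # 43
```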

Conclusion:

In this article, we have uploaded the Introduction to Machine Learning Week 11 NPTEL Assignment Answers. These expert-verified solutions are designed to help you understand key concepts, simplify complex topics, and enhance your assignment performance. Stay tuned for weekly updates and visit www.answergpt.in for the most accurate and detailed solutions.